Merge branch 'master' into 'master'

# Conflicts:
#   doc/RELEASE_NOTES.txt

- Installation/APSDeveloperInstallation.md: 308 additions, 6 deletions

- Installation/DataManagementSplitSystemSetup.md: 482 additions, 0 deletions

- Installation/images/firewall-setup.png: 0 additions, 0 deletions

- Installation/images/typical_install_dir.png: 0 additions, 0 deletions

- RELEASE_NOTES.txt: 11 additions, 5 deletions

- getting_started/getting-started.md: 251 additions, 0 deletions

- getting_started/gettingDataFromGlobus.md: 39 additions, 0 deletions

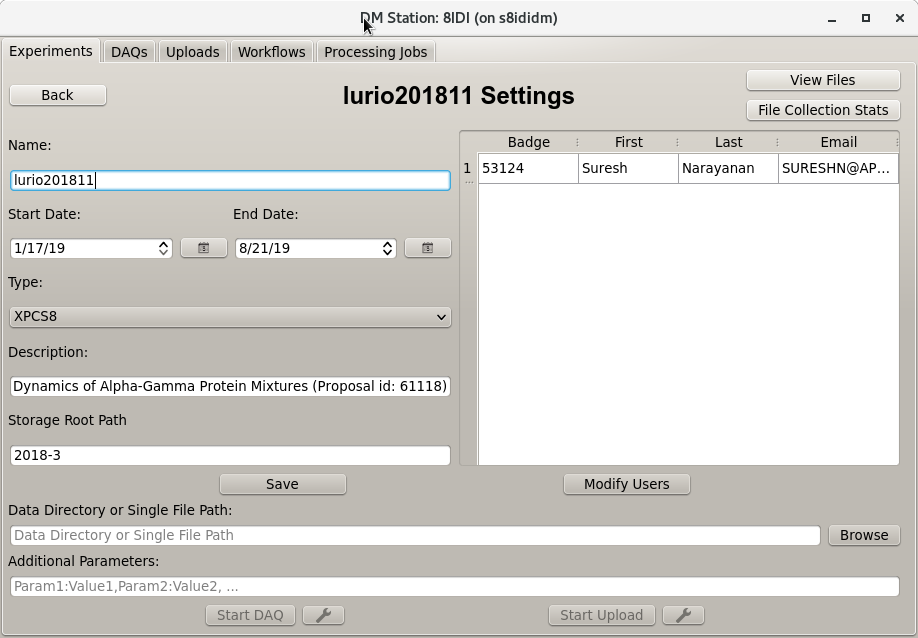

- getting_started/images/dm-station-gui-experiments-detail.png: 0 additions, 0 deletions

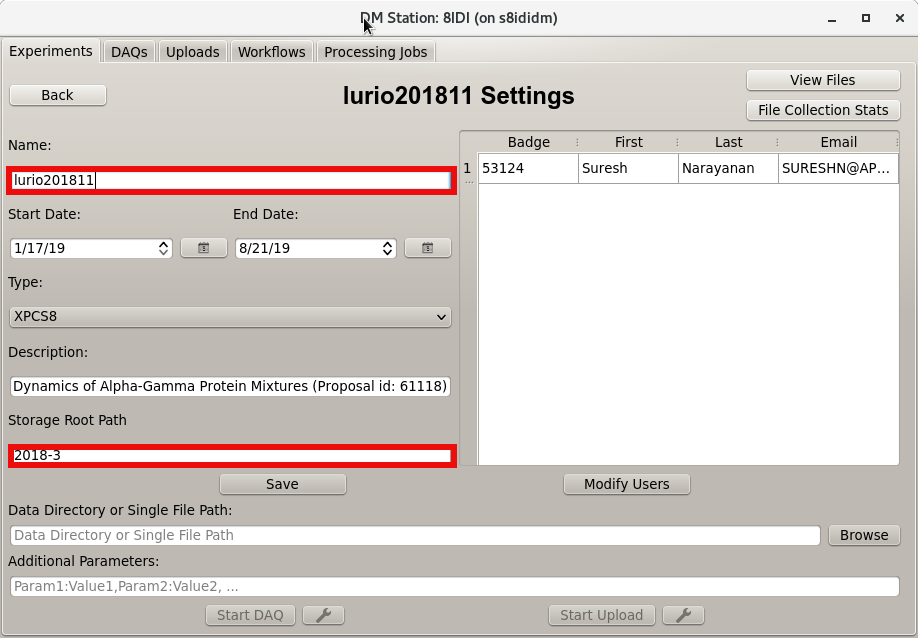

- getting_started/images/dm-station-gui-experiments-file-location-items.png: 0 additions, 0 deletions

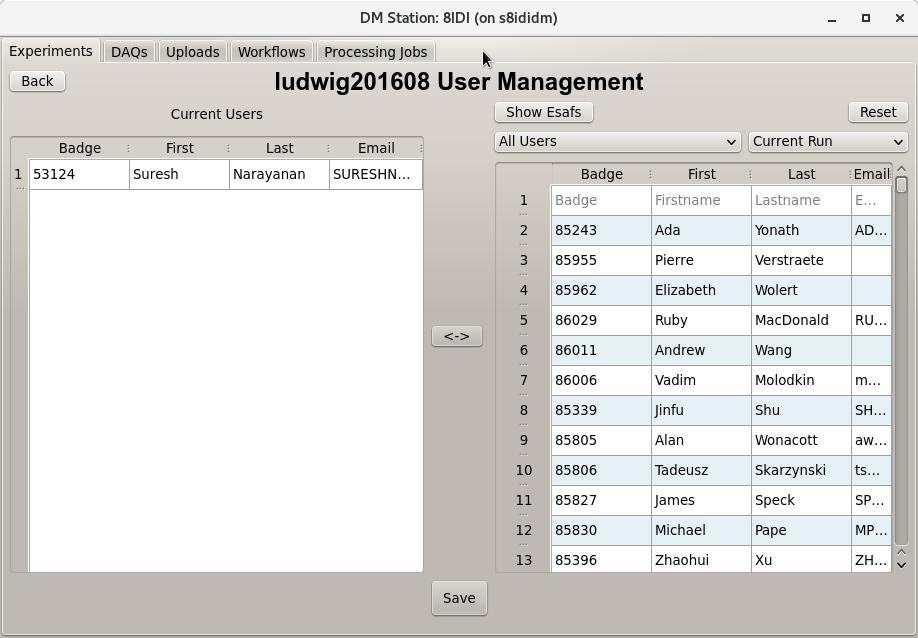

- getting_started/images/dm-station-gui-experiments-user-management.png: 0 additions, 0 deletions

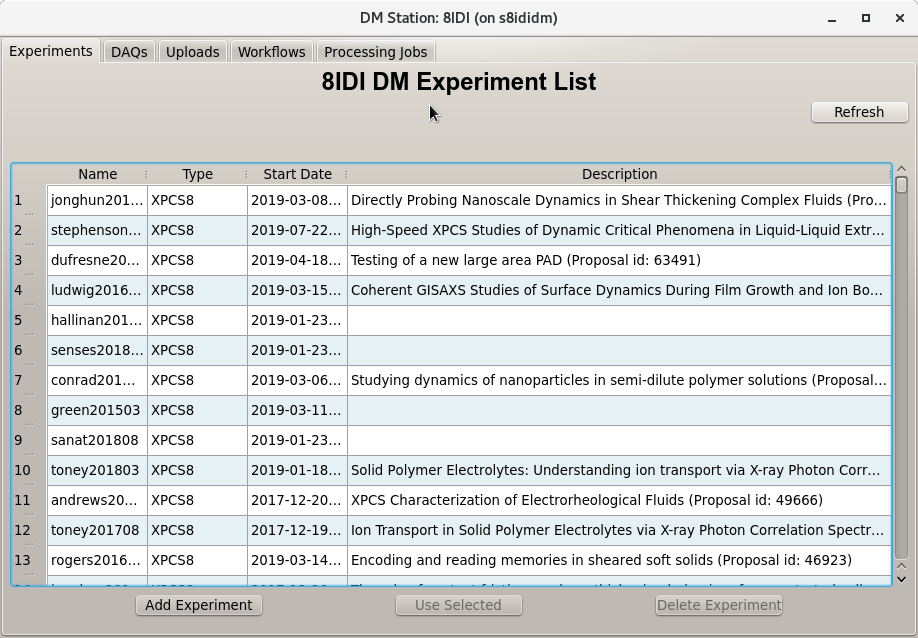

- getting_started/images/dm-station-gui-experiments.png: 0 additions, 0 deletions

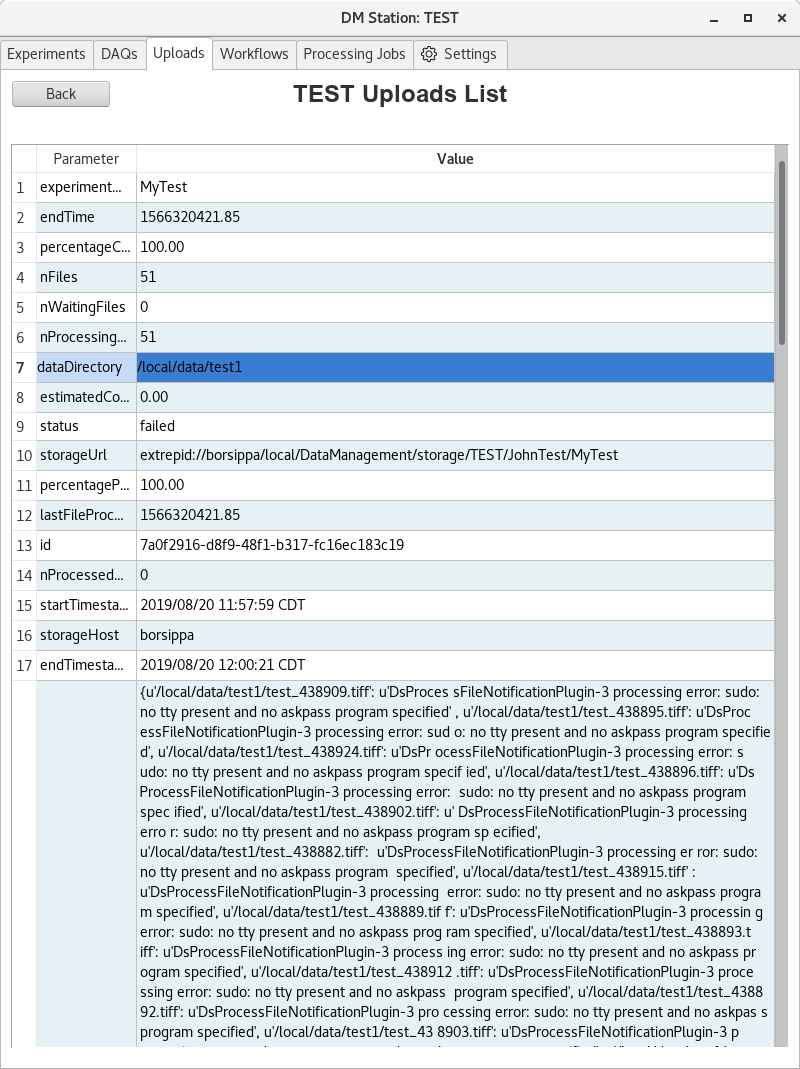

- getting_started/images/dm-station-gui-uploads-detail.png: 0 additions, 0 deletions

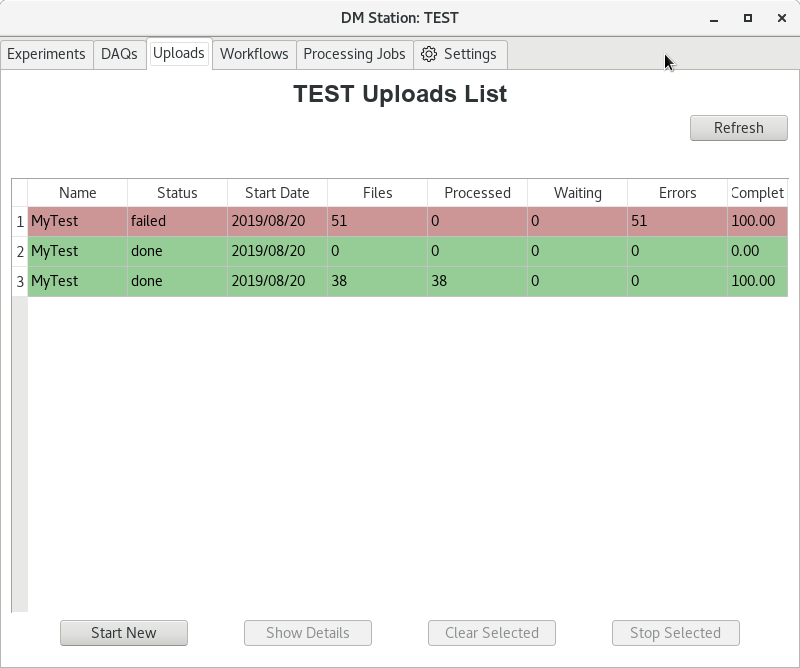

- getting_started/images/dm-station-gui-uploads.png: 0 additions, 0 deletions

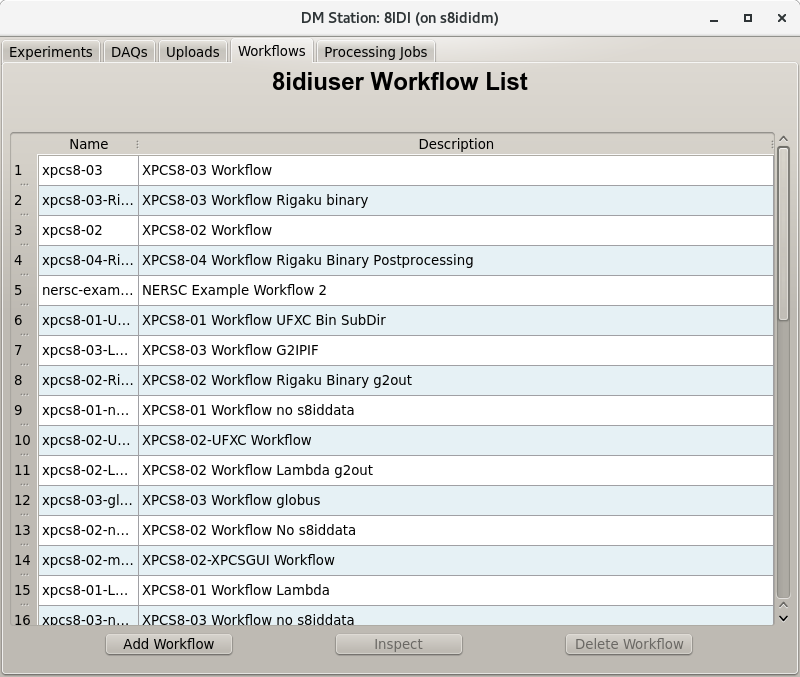

- getting_started/images/dm-station-gui-workflows.png: 0 additions, 0 deletions

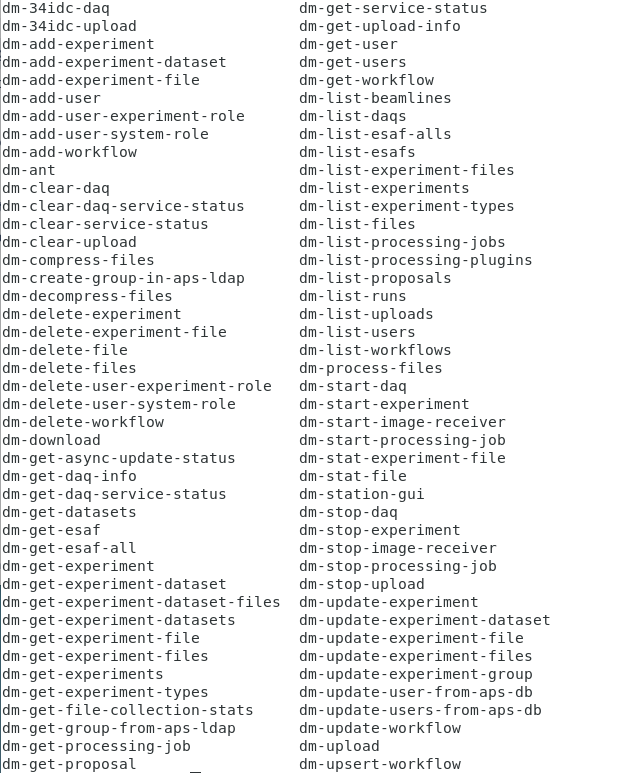

- getting_started/images/dm-system-commands.png: 0 additions, 0 deletions

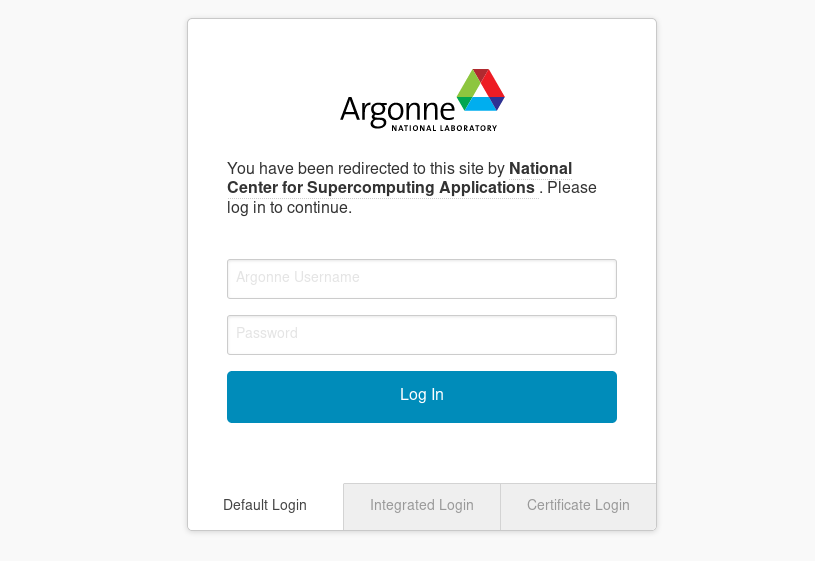

- getting_started/images/globus-anl-login.png: 0 additions, 0 deletions

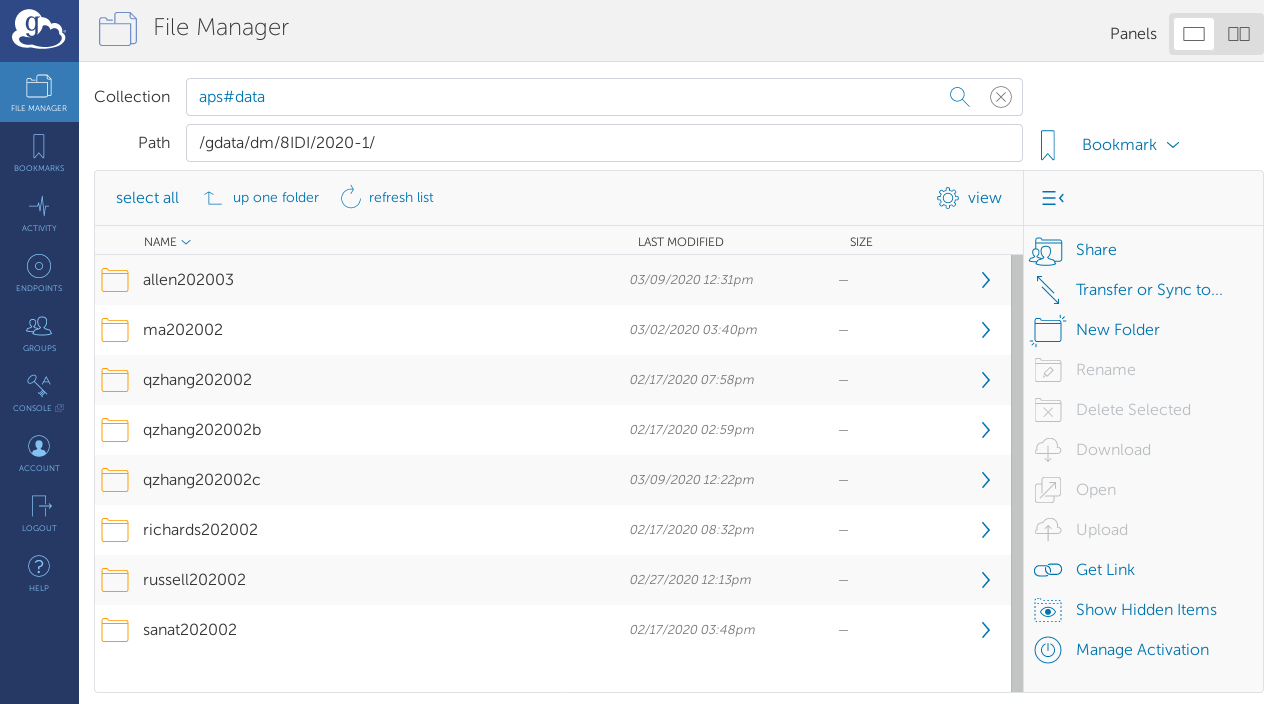

- getting_started/images/globus-beamline-directory.png: 0 additions, 0 deletions

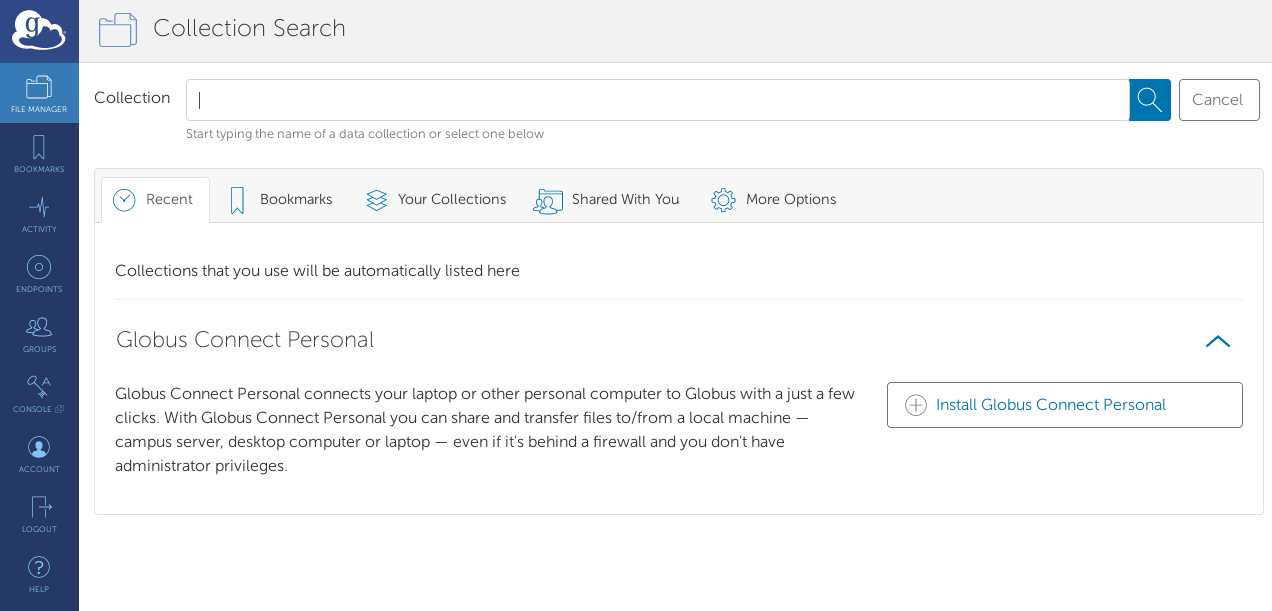

- getting_started/images/globus-collection-page.png: 0 additions, 0 deletions

- getting_started/images/globus-dm-authenticate,png: 0 additions, 0 deletions

- getting_started/images/globus-dm-authenticate.png: 0 additions, 0 deletions

Installation/images/firewall-setup.png (new file, mode 100644, 133 KiB)

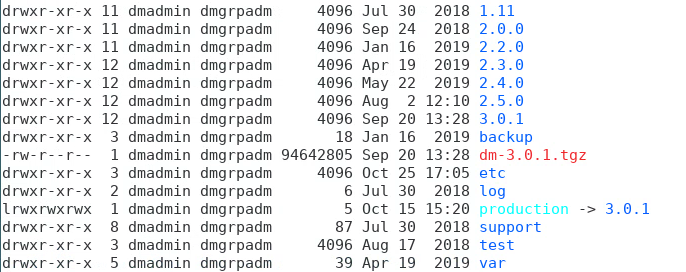

Installation/images/typical_install_dir.png (new file, mode 100644, 177 KiB)

getting_started/getting-started.md (new file, mode 100644)

getting_started/gettingDataFromGlobus.md (new file, mode 100644)

getting_started/images/globus-anl-login.png (new file, mode 100644, 27.3 KiB)
