Commits on Source (26)

- sveseli authored 922034a3
- sveseli authored 27e76ee0
- sveseli authored d41fe0b4
- sveseli authored 70dca8de (Conflicts: doc/RELEASE_NOTES.txt)
- hammonds authored c9e93dfc (Rsync SSH Key Enhancements. See merge request DM/data-management!8)
- hammonds authored 26e4ac6b
- hammonds authored 8c9a2ce9
- hammonds authored e914a553
- Hannah Parraga authored 40310696
- Hannah Parraga authored 3e23e937
- Hannah Parraga authored 5f5725c5
- hparraga authored ad55701e
- hparraga authored ac06f75f
- hparraga authored 7791d5a7
- hparraga authored 05bc7e28
- hparraga authored 1fe6c0fb
- hparraga authored 45aed14f
- hparraga authored 4ed0b85d

Showing

- Installation/APSDeveloperInstallation.md 0 additions, 391 deletions
- Installation/APSSectorDeployment.md 0 additions, 1 deletion
- Installation/DataManagementSplitSystemSetup.md 0 additions, 482 deletions
- Installation/images/firewall-setup.png 0 additions, 0 deletions
- Installation/images/typical_install_dir.png 0 additions, 0 deletions
- Makefile 0 additions, 6 deletions
- RELEASE_NOTES.txt 13 additions, 1 deletion
- beamline_names.txt 2 additions, 0 deletions
- demo/apsu-20150709/demo_notes.sv.txt 0 additions, 219 deletions
- demo/apsu-20150709/machine_prep_notes.sv.txt 0 additions, 147 deletions
- demo/sdm-20150716/demo_notes.sv.txt 0 additions, 159 deletions
- demo/sdm-20150716/machine_prep_notes.sv.txt 0 additions, 147 deletions
- demo/sprint-20150421/demo_notes.sv.txt 0 additions, 209 deletions
- demo/sprint-20150630/demo_notes.sv.txt 0 additions, 253 deletions
- dm_test_system_deployment_notes.txt 0 additions, 42 deletions
- getting_started/getting-started.md 1 addition, 250 deletions
- getting_started/gettingDataFromGlobus.md 1 addition, 78 deletions

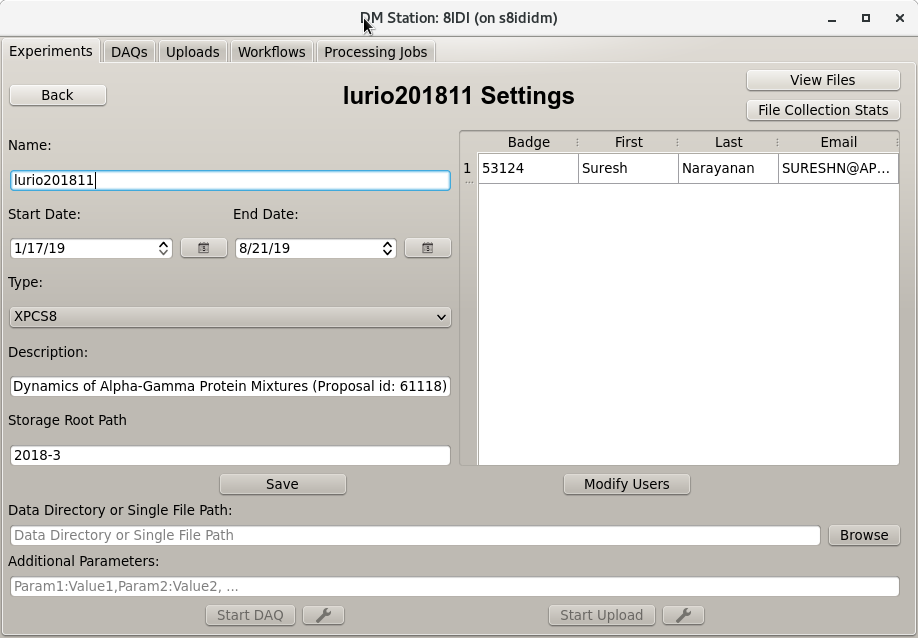

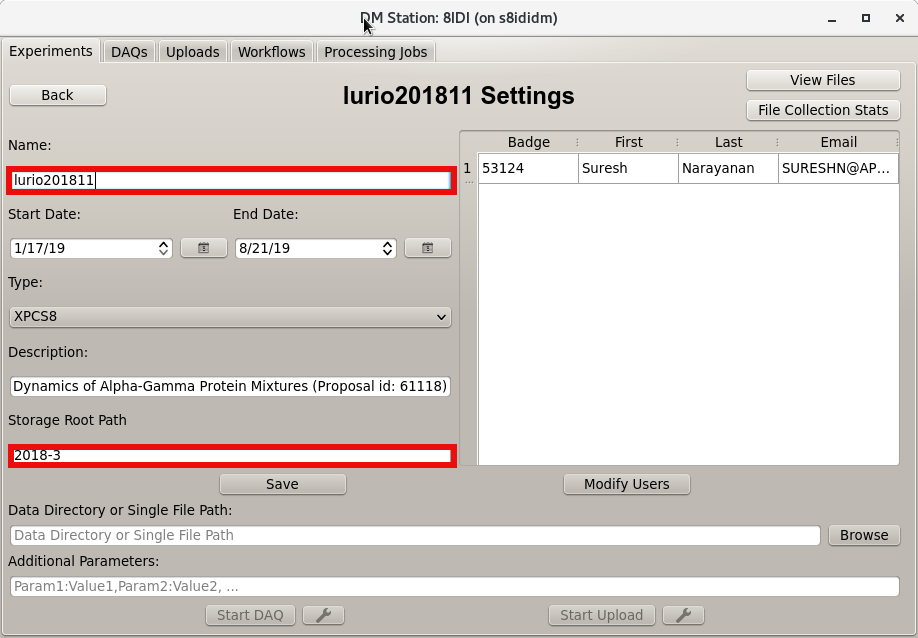

- getting_started/images/dm-station-gui-experiments-detail.png 0 additions, 0 deletions
- getting_started/images/dm-station-gui-experiments-file-location-items.png 0 additions, 0 deletions

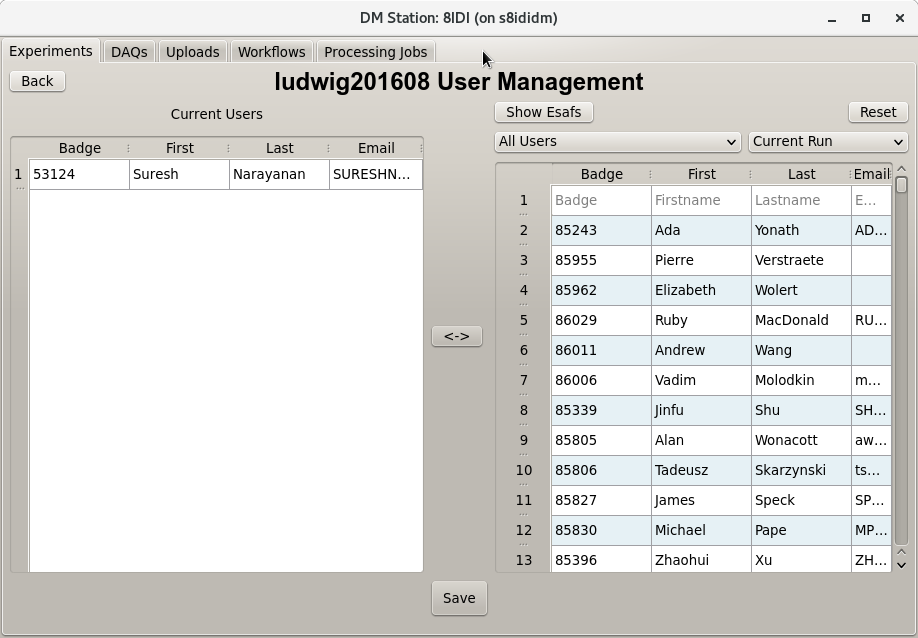

- getting_started/images/dm-station-gui-experiments-user-management.png 0 additions, 0 deletions

Installation/APSSectorDeployment.md (deleted, 100644 → 0)


Makefile (deleted, 100644 → 0)

demo/apsu-20150709/demo_notes.sv.txt (deleted, 100644 → 0)

demo/sdm-20150716/demo_notes.sv.txt (deleted, 100644 → 0)

dm_test_system_deployment_notes.txt (deleted, 100644 → 0)
